A few years ago, I sat across the desk from a colleague, discussing his vision for a joint AI initiative. As a product manager, I pushed for clarity: What problem were we solving? What was the measurable outcome? What was the why behind this effort? His response was simple: democratization. Just giving people access. No clear purpose, no defined impact, just the assumption that making something available would automatically lead to progress. That conversation stuck with me because it highlighted a fundamental flaw in how we think about democratizing technology.

The term “democratizing,” as applied to technology, began to gain traction in the late 20th century, particularly during the rise of personal computing and the internet.

Democratizing technology typically means making it accessible to a broader audience, often by reducing cost, simplifying interfaces, or removing barriers to entry. The goal is to empower more people to use the technology, fostering innovation, equality, and progress.

Personal computers, the argument went, would “democratize” access to computing power by putting it in the hands of individuals rather than large institutions or corporations. Similarly, the internet would “democratize” access to information by removing gatekeepers from publishing and content distribution.

By the 2010s, “democratizing” became a buzzword in tech—used to describe making advanced tools like big data, AI, and machine learning accessible to more people. What was once in the hands of domain experts was now in the hands of the masses.

Today, the term is frequently used in discussions about generative AI and other advanced technologies. These tools are marketed as democratizing creativity, coding, and problem-solving by making complex capabilities accessible to non-experts.

The word “democratization” resonates because it aligns with broader cultural values, signaling fairness, accessibility, empowerment, and progress. The technology industry loves grand narratives, and “democratizing” sounds more revolutionary than “making more accessible.” It suggests that technology can break down barriers and create opportunities for everyone.

However, as we’ll see, the reality is often more complicated, and the term can obscure the challenges and inequalities that persist. Democratization often benefits those who already have the resources and knowledge while leaving others behind.

I’ve long thought that “democratization” was an interesting word choice for technology because it evokes the ideals of a democratic state.¹ Both rest on the assumption that giving people access will automatically lead to better outcomes, fairness, and participation. In reality, both must contend with the tension between accessibility and effective use, the gap between ideals and reality, and the complexity of ensuring equitable participation. In practice, access alone is not enough; people need education, understanding, and responsible engagement for the system to function effectively.

Democratization ≠ Access

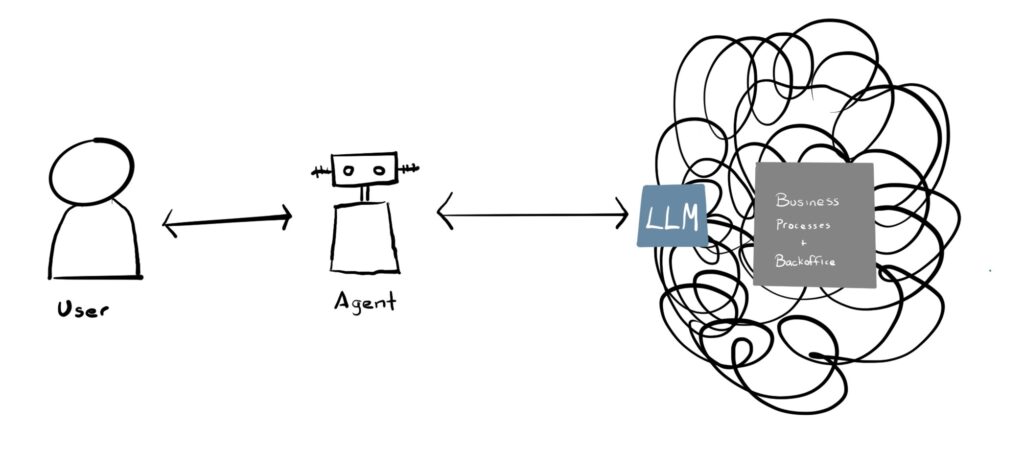

I’ve encountered many leaders who equate democratization with access, as if the goal were simply to put tools in people’s hands. But having access to AI, big data, or generative tools doesn’t mean people know how to use them properly or how to interpret their outputs.

Similarly, just because people have the right to vote doesn’t mean they fully understand policies, candidates, or the consequences of their choices.

In technology, access is meaningful only when it drives specific outcomes, such as innovation, efficiency, or solving real-world problems. In a democratic state, access to voting and participation is not an end but a means to achieve broader goals, such as equitable representation, effective governance, and societal progress.

Without a clear purpose, access risks becoming superficial, failing to address deeper systemic issues or deliver tangible improvements. In both cases, democratization must be guided by a vision beyond mere access to ensure it creates a meaningful, lasting impact.

Democratization requires not just opening doors but also empowering individuals with the knowledge, understanding, and skills to walk through them meaningfully. Without this foundation, the promise of democratization remains incomplete.

Democratization ≠ Equality

The future is already here, it’s just not evenly distributed.

William Gibson²

The U.S. was built on democratic ideals. However, political elites, corporate interests, and media conglomerates shape much of the discourse because political engagement is skewed toward those with resources, time, and education. Underprivileged communities face barriers to participation.

The same is true in technology. The wealthy and well-educated benefit more from new technology, while others struggle to adopt it and are left behind. AI and big data were supposed to be open and empowering, yet a handful of tech giants still control them, setting the rules and limits for everyone else.

Both systems struggle with the reality that equal access does not automatically lead to equal outcomes, as power dynamics and systemic inequalities persist. Even when technology is democratized, those with more resources or expertise often benefit disproportionately, widening existing inequalities.

Bridging the gap between access and outcomes demands more than good intentions—it requires deliberate action to dismantle barriers, redistribute power, and ensure that everyone can benefit equitably. By focusing on education, structural reforms, and inclusive practices, both technology and democratic systems can move closer to fulfilling their promises of empowerment and equality.

Democratization ≠ Expertise

These are dangerous times. Never have so many people had so much access to so much knowledge and yet have been so resistant to learning anything.

Thomas M. Nichols, The Death of Expertise

Critical thinking is essential for both the democratization of technology and the functioning of a democratic state. In technology, access to AI, big data, and digital tools means little if people cannot critically evaluate information, recognize biases, or understand the implications of their actions. Misinformation, algorithmic manipulation, and overreliance on automation can distort reality, just as propaganda and political rhetoric can mislead voters in a democracy. Similarly, for a democratic state to thrive, citizens must question policies, evaluate candidates beyond slogans, and resist emotional or misleading narratives.

Without critical thinking, technology can be misused, and democratic processes can be manipulated, undermining the very ideals of empowerment and representation that democratization seeks to achieve. In both realms, fostering critical thinking is not just beneficial—it’s necessary for meaningful progress and equity.

Addressing the lack of critical thinking, in technology and in society at large, requires a holistic approach that combines education, systemic reform, and cultural change. By equipping individuals with the skills and tools to think critically, and by creating systems that reward thoughtful engagement, we can build a more informed, equitable, and resilient society. This is not a quick fix but a long-term investment in the health of both technological and democratic systems.

Democratization ≠ Universality

Both technology and governance often operate under the assumption that uniform solutions can meet the diverse needs of individuals and communities. This can result in a mismatch between what is offered and what is actually required, highlighting the limits of a one-size-fits-all approach.

In technology, for example, AI tools and software may be democratized to allow everyone access, but these tools often assume a certain level of expertise or familiarity with the technology. While they may work well for some users, others may find them difficult to navigate or may be unable to harness their full capabilities. A tool designed for the general public might unintentionally alienate those who need a more tailored approach, leaving them frustrated or disengaged.

Similarly, in governance, policies are often created with the idea that they will serve all citizens equally. However, a single national policy, whether on healthcare, education, or voting rights, can fail to account for the vastly different needs and circumstances of individual communities. For example, universal healthcare policies may not address the specific access issues faced by rural or low-income populations, and standardized curricula may not be effective for students with different learning needs or backgrounds. When solutions are not tailored to the unique realities of diverse groups, they risk reinforcing existing inequalities and failing to deliver meaningful results.

The challenge, then, is finding a balance between providing access and ensuring that solutions are adaptable and responsive to the needs of different communities. Democratization doesn’t guarantee universal applicability, and it’s essential to recognize that true empowerment comes not just from providing access but from ensuring that access is meaningful and relevant to everyone, regardless of their context or capabilities. Without this careful consideration, democratization can become a frustrating experience that leaves many behind, ultimately hindering progress rather than fostering it.

Conclusion

The democratization of technology, much like democracy itself, is harder than it sounds. Providing access to tools like AI or big data is only the first step—it doesn’t guarantee that people know how to use them effectively or equitably. Without the necessary education, critical thinking, and support, access alone can be frustrating and lead to further division rather than empowerment.

Just as democratic governance struggles with the assumption that one-size-fits-all policies can serve diverse communities, the same happens with technology. Tools designed to be universally accessible often fail to meet the unique needs of different users, leaving many behind. Real democratization requires not just opening doors but ensuring that everyone has the resources to walk through them meaningfully.

Democratization is hard, in technology just as in governance. It’s not just about giving people access; it’s about giving them the knowledge, understanding, and opportunity to use that access in ways that truly empower them.

Until we get this right, the promise of democratization (and democracy) remains unfulfilled.

Footnotes

1. The United States of America is a representative democracy (or a democratic republic).

2. https://quoteinvestigator.com/2012/01/24/future-has-arrived/